Imec halves peak temperatures in 3D HBM-on-GPU AI chips

Imec has applied its system technology co-optimization (STCO) strategy to cut peak processor temperature in an HBM-on-GPU stack in half. Presented at this week’s IEEE International Electron Devices Meeting (IEDM) in San Francisco, the study demonstrates how stacking high-bandwidth memory directly on top of GPUs – a promising path for next-gen AI accelerators – can be made thermally viable through a mix of design changes at both the technology and system levels.

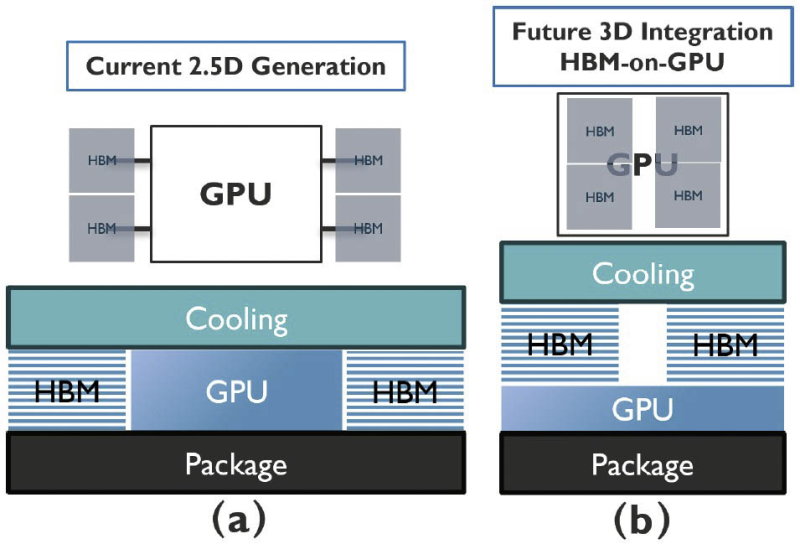

Currently, AI accelerators typically employ 2.5D integration, placing stacks of HBM around one or two GPUs on a silicon interposer. Stacking the memory on top of the processing units would greatly increase compute density, if only because it would allow up to four GPUs in the same footprint.

Without mitigation, however, peak GPU temperatures in a 3D HBM-on-GPU architecture reach 141.7 degrees Celsius under realistic AI training conditions. That’s well beyond safe operating limits. Imec researchers brought that down to 70.8 degrees using STCO, roughly matching current 2.5D integration benchmarks. Techniques include merging HBM stacks, optimizing thermal silicon, double-sided cooling and frequency scaling. While halving the GPU clock led to a 28 percent performance drop, the overall package outperformed the 2.5D baseline thanks to a higher throughput density.
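
To make the numbers above concrete, here is a rough back-of-the-envelope sketch in Python. The temperatures and the 28 percent figure come from the article. The GPU counts per footprint (four for 3D, one or two for 2.5D) are taken from the article's general description, and combining them with the 28 percent drop, read here as a per-GPU figure, is an assumption made for illustration only, not a result reported by imec. The function names are hypothetical.

```python
# Figures quoted in the article.
UNMITIGATED_PEAK_C = 141.7       # 3D HBM-on-GPU, no mitigation
MITIGATED_PEAK_C = 70.8          # after imec's STCO measures
PERF_DROP_AT_HALF_CLOCK = 0.28   # performance lost when the GPU clock is halved


def temperature_ratio(before_c: float, after_c: float) -> float:
    """Ratio of mitigated to unmitigated peak temperature (in degrees Celsius)."""
    return after_c / before_c


def relative_throughput_density(gpus_3d: int, gpus_25d: int, perf_drop: float) -> float:
    """Throughput per footprint of the 3D package relative to a 2.5D baseline.

    ASSUMPTION (not from the study): every GPU in the 3D package loses
    `perf_drop` of its performance, the 2.5D GPUs run at full speed, and
    throughput scales linearly with the number of GPUs in the footprint.
    """
    return gpus_3d * (1.0 - perf_drop) / gpus_25d


if __name__ == "__main__":
    ratio = temperature_ratio(UNMITIGATED_PEAK_C, MITIGATED_PEAK_C)
    print(f"Mitigated peak is {ratio:.1%} of the unmitigated peak")

    # Illustrative only: four GPUs in 3D versus one or two GPUs in 2.5D.
    for baseline_gpus in (1, 2):
        gain = relative_throughput_density(4, baseline_gpus, PERF_DROP_AT_HALF_CLOCK)
        print(f"vs {baseline_gpus} GPU(s) in 2.5D: {gain:.2f}x throughput density")
```

Under these simplified assumptions, the slower 3D package still comes out ahead per unit of footprint, which is consistent with the article's claim of higher throughput density. The actual gain depends on the workload and on the configuration imec evaluated.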