Augmented rather than artificial – and that’s a good thing

Over the past year and a half, a lot has been said, written and claimed about artificial intelligence. It’s going to take over our lives, it’s going to take our jobs and it’s going to make a lot of jobs superfluous. Of course, things are never that black and white, but there’s certainly some truth in most of these claims.

One of the areas where AI is said to have a lot of impact is creative work, where something new gets made. That includes software development, or at least the part that concerns writing code. Since this is the domain I was trained in many moons ago, and where I still work, it makes sense to have a look at what the impact could be.

Statistical analysis

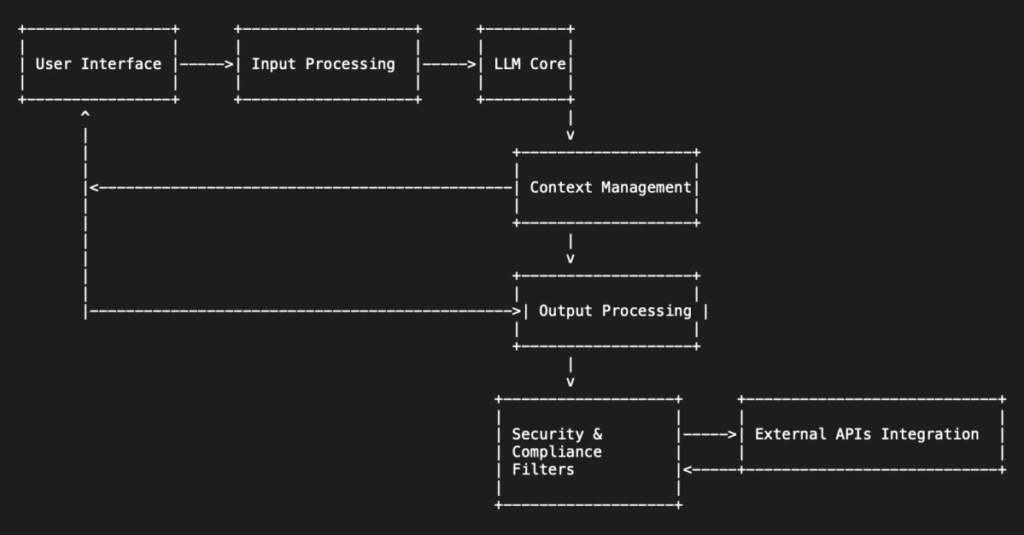

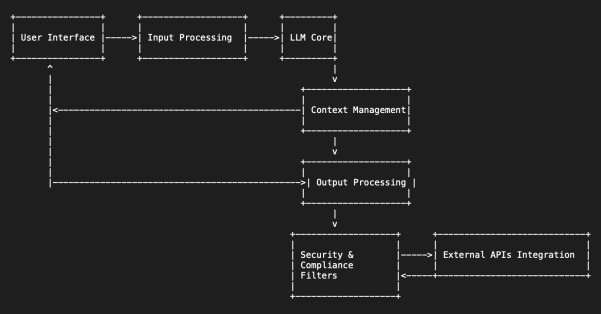

The current discussion started with ChatGPT, OpenAI’s “generative pre-trained transformer.” In the tool’s own words, that’s “a type of artificial intelligence model developed by OpenAI that’s designed to generate human-like text. The ‘generative’ part of GPT indicates that it can create content, the ‘pre-trained’ aspect means it’s been trained on a vast corpus of text before being fine-tuned for specific tasks and ‘transformer’ refers to the underlying architecture that the model is built upon, which is effective at handling sequences of data, such as language.”

In short, ChatGPT is a piece of software that’s trained by feeding it a lot of text and that uses what it learned from this text to try and respond to questions in a way that makes sense. This all builds on research in the field of natural language processing (NLP) and is, in essence, based on statistics. That is, statistics are used to determine which sequences of words or word fragments (so-called tokens) make sense in a certain context, by finding them in texts. ChatGPT uses statistics, neural networks and deep learning to establish a starting point for ‘understanding and responding.’
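To make the statistical idea a bit more tangible, here is a deliberately tiny sketch in Python. It is not how ChatGPT works internally: it only counts which word follows which in a made-up training sentence and then suggests the most frequent follower. The function names `train_bigrams` and `most_likely_next` and the sample corpus are assumptions chosen for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def most_likely_next(followers, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Made-up training text; a real model is trained on vastly more data.
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigrams(corpus)

print(most_likely_next(model, "the"))  # "cat" follows "the" most often here
print(most_likely_next(model, "dog"))  # never seen in training, so None
```

A large language model replaces these simple counts with a neural network that takes a much longer context into account, but the principle of predicting a plausible continuation from training text is the same.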

There’s a lot of technical detail that needs to be explained to make it completely clear, but I’ll leave that to the experts. The key thing here is that ChatGPT can derive context from what it’s being asked and then use what it’s learned to respond in a way that (at least statistically) makes sense in that same context. Similar models have been developed by others, like Meta, Microsoft and Google, with more human-friendly names like BART, BERT, RoBERTa and ELMo. They’re grouped under the moniker LLM (large language model), a name that has been applied to such language processing models for quite some time already.

The approach is very powerful because it helps in searching for information. However, as several publications on the internet have shown, it also leads to so-called “hallucinations”: ChatGPT and its competitors sometimes make up things that merely seem to make sense.

Although the models are continuously being improved to avoid mistakes, they’re still far from perfect. There was a time when asking ChatGPT what 5 + 5 is would initially yield 10, but adding that your wife says it’s 11 and claiming that she’s always right would change its mind. That no longer works. It isn’t always clear, though, whether this has been fixed in the model or in the tool around it (let’s say the ‘chat’ part of ChatGPT).

In any case, the models are based on language analysis, context derivation and then generating answers. Whether or not that’s real intelligence is a big discussion all over the world. I’d still summarize it as a superset of statistical analysis because it won’t work without a huge amount of training data.

Experimenting with ChatGPT

Since ChatGPT (and even a bit before), the models have also been applied in software engineering, mostly to produce code. These solutions could be categorized as an AI-based subset of so-called productivity tools. GitHub Copilot, for example, is a tool that uses the same principles as ChatGPT specifically for software coding. It doesn’t get context just from the questions you ask it but also from the code you’re working on (the files you have open in your development environment and their content). This lets it automate repetitive tasks for a developer and answer questions on what could be the best solution for a problem, the best way to improve a piece of code or which parts of the code would be affected by a change in a specific function.

All of this is of course also available in other forms, but the way the models are constructed allows some things to be done in a way that fits exactly with your current work, instead of providing a generic solution. At least, that’s the claim. I haven’t done a lot with Copilot, because coding is only a small part of what I do, but it seems to work – also based on what I hear from others.

Another area where AI plays a role in software development is setting up a basic framework in a new programming language. There, the use is more focused on generating the actual code. At the moment, LLMs are trained with large data sets and if you want them to learn a programming language properly, they need to be fed a lot of programs in that language, as well as its documentation. To be able to generate production-ready code, they also would have to have ‘knowledge’ of good software engineering practices.

Right now, that’s a big limitation and even a risk. ChatGPT isn’t trained specifically on programming or on a specific programming language, but it can help generate more or less working code. This is possible because a lot of code is available on ‘self-help’ websites for programming like Stack Overflow, which contains questions and answers, often accompanied by simplified examples, albeit not free of mistakes. More complete and reliable code, as found in repositories on GitHub and GitLab, is likely not included in the training data (yet), so just as with those websites, the correctness and reliability of code suggested by LLMs aren’t ensured. Checking this requires manual work, although tools like ChatGPT can also be used to debug and correct their own code suggestions.

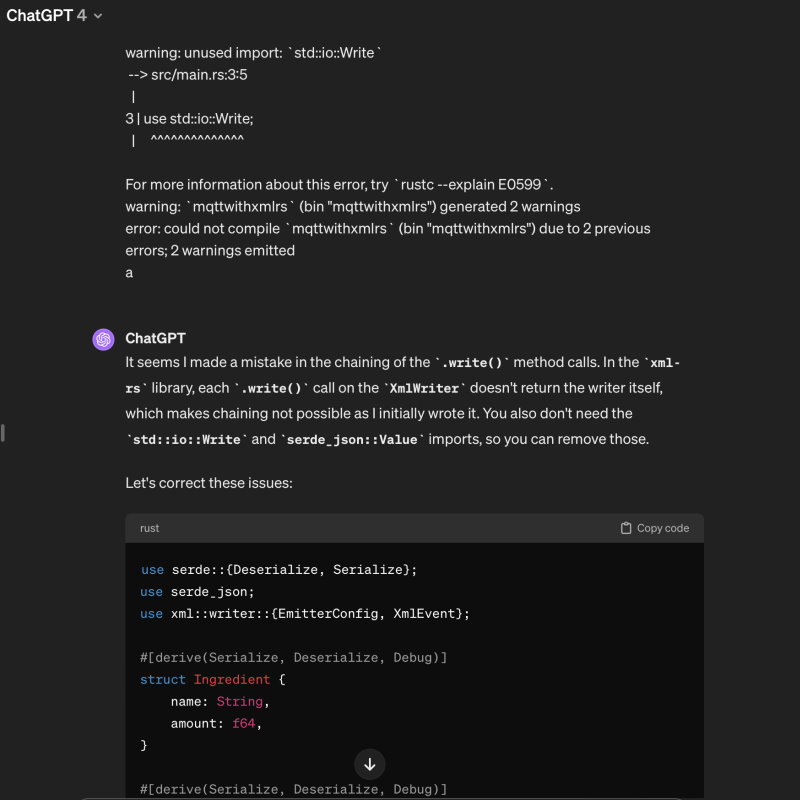

As an experiment, I asked ChatGPT a while ago to help me build a simple converter between an MQTT messaging system and Microsoft Message Queue (MSMQ), using the Rust programming language. In the process, I refined my requirements, and ChatGPT adjusted the code accordingly. A few times, I asked it to correct bugs found by the compiler and in all cases, it was able to do so. In about 1.5 hours, I got something I could use, although not quite ‘production ready’ as some error handling code was still missing. However, it did allow me to explain the principle of the converter to others and show the possible complications of developing a fully operational version.

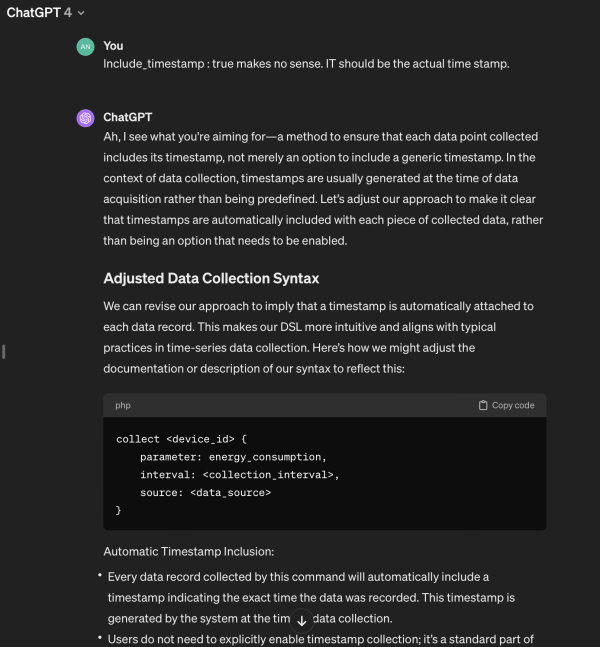

Finally, there’s the area of domain-specific languages (DSLs) and model-driven development. There, we try to raise the level of abstraction in software development to the point where end users or product owners can specify what they want in a less technical form than a programming language. Code can then be generated from their specifications. There’s been quite some success with that in the past, and there’s still a lot of development going on in this area.
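As a rough illustration of generating code from a less technical specification, the sketch below turns a one-line description of a concept into the source of a Python data class. The mini-language (`Name: field type, field type`), the `TYPE_MAP` table and the `generate_class` function are all invented for this example; real DSL toolchains are far richer.

```python
# Hypothetical mapping from the DSL's plain-language types to Python types.
TYPE_MAP = {"text": "str", "number": "float", "flag": "bool"}


def generate_class(spec: str) -> str:
    """Turn 'Name: field type, field type' into Python dataclass source code."""
    name, _, fields_part = spec.partition(":")
    lines = [
        "from dataclasses import dataclass",
        "",
        "@dataclass",
        f"class {name.strip()}:",
    ]
    for field in fields_part.split(","):
        # Each field is written as '<name> <type>' in the specification.
        field_name, field_type = field.split()
        lines.append(f"    {field_name}: {TYPE_MAP[field_type]}")
    return "\n".join(lines)


# What a product owner might write, followed by the generated code.
source = generate_class("Customer: name text, age number, active flag")
print(source)
```

The point of the sketch is the division of labor: the specification stays readable for a non-programmer, while the translation rules live in one place where an engineer can maintain them.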

Last year, I did some experiments with ChatGPT, asking it to accept my definitions of certain concepts and the relations between them. I then fed it short scenarios based on those same definitions and asked it to generate code for user interfaces or small controllers from these scenarios. That wasn’t extremely successful because of the same limitations on coding I mentioned before, but also not completely unsuccessful. I expect we’ll soon see new developments in this area as well.

Imagination and creativity

These are just three examples of what I and others have looked at recently. There’s no doubt that ChatGPT has brought to the spotlight something that’s been brewing for decades. After all, ELIZA, an early attempt at a conversational program, was already developed in the 1960s.

The success of ChatGPT has sparked fear as well as opportunism. It certainly has made a lot of people question what the future will look like, including in software development. LLM-based tools can already help us with a lot of things by letting us do repetitive things faster and making complex things easier, but it will be a while before we can do without architects, designers and programmers. Although some people claim the opposite, the current tools have no consciousness, no imagination and no creativity, which are necessary ingredients for starting new developments.

At the same time, discussing software ideas not only with colleagues and customers but also with an LLM-based tool is an extra trigger to spark that imagination and creativity. Having such a tool open in your browser or on your phone all day and using it as a sparring partner when no human colleagues are around certainly comes in handy. Furthermore, if we limit the training data to our own code and notes from the past or our digital library, this would create a tool that helps to quickly (re)discover solutions for today’s problems.

For these reasons, I like to refer to AI as “augmented intelligence,” an add-on to the developer’s brain.