IBM: the era of quantum utility is near

In the fall of 2019, Google researchers claimed the world’s first demonstration of quantum supremacy: their 53-qubit Sycamore quantum computer, based on superconducting circuits, took 200 seconds to perform a calculation that the researchers estimated would take a state-of-the-art supercomputer 10,000 years to complete.

That claim was challenged from two different angles. First, IBM argued that one of its powerful supercomputers could actually solve the problem in 2.5 days. That is still much slower than Sycamore, but it is reasonable to suppose that more efficient classical algorithms could close the gap. To claim quantum supremacy, IBM said, a quantum computer needs a far larger lead over the best classical calculation. That criticism proved to hold water: last year, Chinese researchers performed the calculation on a GPU-powered supercomputer in a little over 300 seconds.

Second, others, including IBM, objected to the term itself. “Quantum supremacy” raises expectations that clash with the actual utility of Google’s demonstration. The random sequence of instructions that Sycamore executed has no practical application; in fact, the task appears to have been picked precisely because it favors the quantum computer.

Experts nonetheless agree that Google’s demonstration was a milestone: any way you slice it, it represented significant progress in quantum computing. In the chronicle of quantum computing, it marks the first time a quantum computer took on a classical one and won, even if more modern classical hardware looks set to best it eventually.

The next major milestone in that chronicle might be claimed by IBM itself. IBM’s result is more modestly named but billed as more useful: according to Darío Gil, director of IBM Research, the team’s simulation of a magnetic material’s behavior signals that “we’re now entering a new era of utility for quantum computing.”

Extremely difficult

Quantum systems derive their power from the fact that the state of n qubits is a superposition of 2ⁿ basis states, and a single operation acts on all of them at once. That’s why they are, in principle, well suited to plowing through huge search spaces – finding the needle in the haystack, so to speak. Unfortunately, qubits are fragile, and the resulting errors severely cut into quantum computers’ performance.
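Because n qubits span 2ⁿ basis states, a classical computer that stores the full quantum state needs one complex number per basis state, and that cost doubles with every added qubit. The minimal sketch below makes the arithmetic concrete. It assumes a plain state-vector representation with 16 bytes (one complex128 value) per amplitude; the function names are illustrative, and real simulators often avoid storing the full vector.

```python
# Sketch: how the memory needed to store an n-qubit state grows.
# Assumption: a full state-vector representation holding one complex128
# amplitude (two 64-bit floats = 16 bytes) per basis state.

BYTES_PER_AMPLITUDE = 16  # complex128


def num_amplitudes(n_qubits: int) -> int:
    """Number of complex amplitudes describing an n-qubit state: 2**n."""
    return 2 ** n_qubits


def state_vector_bytes(n_qubits: int) -> int:
    """Memory needed to hold the full state vector as complex128 values."""
    return num_amplitudes(n_qubits) * BYTES_PER_AMPLITUDE


# 53 qubits matches Sycamore, 127 matches IBM's Eagle processor.
for n in (10, 30, 53, 127):
    print(f"{n:>3} qubits: {num_amplitudes(n):.3e} amplitudes, "
          f"{state_vector_bytes(n):.3e} bytes")
```

At Sycamore’s 53 qubits the full vector already needs 2⁵⁷ bytes (128 PiB). At Eagle’s 127 qubits the figure is far beyond any existing machine, which is why classical simulations at that scale rely on approximate methods rather than storing the whole state.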

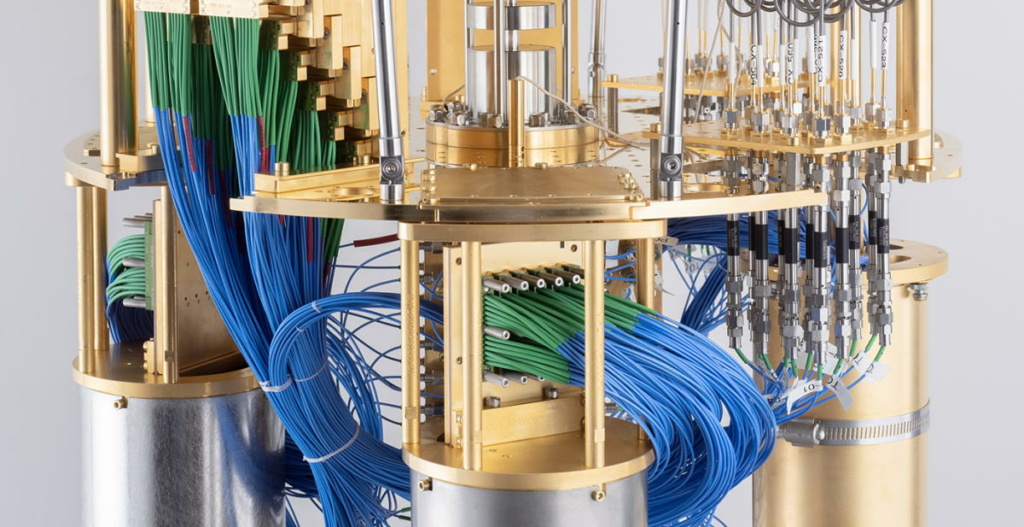

In its experiment, the IBM team demonstrated that a quantum computer can outperform leading classical simulations when the errors in the system are learned and mitigated. The researchers used IBM’s Eagle processor, composed of 127 superconducting qubits, to perform a ‘noisy’ calculation of the magnetic state of a two-dimensional solid. They also measured the noise produced by each of the qubits.

As it turns out, the noise pattern is reasonably predictable: it depends, for example, on a qubit’s position in the array or on defects in the processor’s materials. The team then used these predictions to estimate what the results would have looked like without that noise. As the simulation was scaled up, classical simulations running on supercomputers at Lawrence Berkeley National Lab’s National Energy Research Scientific Computing Center (NERSC) and Purdue University could no longer keep up.
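The article does not spell out IBM’s exact mitigation procedure. One widely used approach that matches the idea of estimating the noise-free result is zero-noise extrapolation: deliberately amplify the noise by known factors, measure how the result degrades, and extrapolate back to zero noise. The sketch below illustrates that idea only. Its noise model and its parameters (`IDEAL_VALUE`, `DECAY_RATE`) are invented for the example and do not represent IBM’s data.

```python
import math

# Hypothetical noise model, for illustration only: the ideal (noise-free)
# expectation value decays exponentially as the noise is amplified by a
# factor `scale` (scale = 1.0 is the hardware's native noise level).
IDEAL_VALUE = 0.8  # assumed "true" answer we want to recover
DECAY_RATE = 0.3   # assumed decay per unit of noise scale


def noisy_expectation(scale: float) -> float:
    """Synthetic stand-in for a measured expectation value at a noise scale."""
    return IDEAL_VALUE * math.exp(-DECAY_RATE * scale)


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    return mean_y - b * mean_x, b


def extrapolate_linear(scales, values):
    """Zero-noise estimate assuming the value is linear in the noise scale."""
    intercept, _ = fit_line(scales, values)
    return intercept


def extrapolate_exponential(scales, values):
    """Zero-noise estimate assuming exponential decay: fit log(value) linearly."""
    intercept, _ = fit_line(scales, [math.log(v) for v in values])
    return math.exp(intercept)


scales = [1.0, 1.5, 2.0]  # native noise plus two deliberately amplified levels
measured = [noisy_expectation(s) for s in scales]

print("raw value at native noise:", round(measured[0], 4))
print("linear extrapolation:     ", round(extrapolate_linear(scales, measured), 4))
print("exponential extrapolation:", round(extrapolate_exponential(scales, measured), 4))
```

Here the raw value at native noise is about 0.59 and the linear fit lands near 0.74, while the exponential fit recovers 0.8 because the synthetic data was generated from that model. The sketch also shows why a predictable noise pattern matters: the extrapolation is only as good as the assumed shape of the noise.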

Because the material model is simplistic, the result itself isn’t practically useful yet, but IBM says it inspires confidence that quantum systems, once scaled further, will be able to simulate real-world systems in the not-so-distant future. “To us, this milestone is a significant step in proving that today’s quantum computers are capable, scientific tools that can be used to model problems that are extremely difficult – and perhaps impossible – for classical systems,” says Gil.

The Eagle, launched in 2021, isn’t IBM’s most advanced quantum processor; that would be the 433-qubit Osprey, introduced last year. IBM expects to break the 1,000-qubit barrier later this year, which might allow for useful computations with the help of error mitigation. Eventually, however, most companies in the field expect to implement error correction, which detects and fixes errors while the computation runs instead of compensating for them afterward.

Main picture credit: IBM