The AI revolution isn’t being held back by energy, but by outdated thinking, argues Martijn Heck.

AI doesn’t have an energy problem. And no, I’m not referring to the fact that the trillions of euros invested in chips and data centers haven’t actually led to operational facilities yet. I’m talking about a fundamental level. AI is leveraging the most advanced technology in the world: chips. The number of transistors per chip doubles every two years. We know this as Moore’s Law. And Moore’s Law isn’t dead. It’s bound to continue for the next two decades. The interesting part is that we also have Koomey’s Law, which states that the energy used per computation goes down by at least a factor of two every two years. This means that AI and semicon technology can keep scaling exponentially, without any additional energy cost. None.
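The arithmetic behind that claim can be sketched in a few lines. This is only an illustration, assuming exactly what the paragraph states: compute doubles every two years and energy per computation halves over the same period. The function name and the normalized units are hypothetical.

```python
def relative_energy(years, doubling_period=2.0, halving_period=2.0):
    """Total energy use relative to today (today = 1.0).

    Assumes compute doubles every `doubling_period` years and energy per
    computation halves every `halving_period` years (both are assumptions
    taken from the text, not measured values).
    """
    compute = 2 ** (years / doubling_period)
    energy_per_computation = 0.5 ** (years / halving_period)
    return compute * energy_per_computation


for years in (2, 10, 20):
    print(years, relative_energy(years))
```

With both periods equal, the two exponentials cancel and the result stays at 1.0 for any horizon. Setting `halving_period` longer than `doubling_period` shows how quickly the balance tips if efficiency gains lag behind.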

By 2030, energy consumption from AI data centers is expected to double compared to last year. That may sound dramatic. But consider this thought experiment: What if we launched ChatGPT-9 in 2031 instead of 2029? By simply delaying it two years, we wouldn’t double our AI energy footprint – we’d keep it steady. Some will argue that the economy urgently needs that extra boost in performance. But that’s a narrow view. By the same logic, if we look ahead over the next fifty years, we’d only need to slow performance growth by about 1.4 percent per year to avoid any increase in energy consumption. That’s a negligible difference. Greenpeace – and our new Dutch Prime Minister – can rest easy. Our AI systems would still grow exponentially in capability, just with a tiny bit more patience.
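The 1.4 percent figure appears to follow from spreading a single doubling of energy use over fifty years. A minimal sketch of that reading, which is an assumption about how the number was derived, with illustrative names:

```python
def annual_slowdown(energy_factor=2.0, years=50):
    """Yearly rate that compounds to `energy_factor` over `years` years."""
    return energy_factor ** (1.0 / years) - 1.0


rate = annual_slowdown()
print(f"{rate:.2%}")  # about 1.4% per year

# Sanity check: compounding that rate for fifty years gives back the factor of two.
print((1 + rate) ** 50)
```

In other words, giving up roughly 1.4 percent of performance growth each year offsets one doubling of energy use over half a century.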

Unfortunately, we seem to have devolved into a 19th-century economic model, where we fall back on the archaic production factors of energy and capital. Cheap energy and an inflated monetary system enable a temporary boost in AI capacity. In terms of sophistication, it’s not unlike a bunch of cavemen going after each other with the largest club.

This won’t scale. Unlike the number of transistors per chip, the number of data centers can’t scale exponentially. It’s a temporary boost, to gain market share. But is market share really that important when the very same data centers will be depreciated in three years, when a new generation of chips hits the market? DeepSeek has shown that user commitment is rather volatile, so that seems unlikely.

Why this collective bubble-blowing? The 20th century wasn’t like that. When we think back on the rise of the semicon industry and the early internet in the 1990s, there was a feeling of technological progress. Of inventions. Of fine-tuning the most advanced industrial tools and processes to eventually establish the most advanced technology humanity has ever seen.

In the 21st century, we turned the internet into mostly a marketing tool, collecting and selling data, where global dominance is key. I think we can all agree that the business models of Booking.com, Airbnb, Uber and Just Eat Takeaway won’t deliver any Nobel Prizes for in-depth technology work. Most efforts focus on strengthening the company’s own position and pushing competition out.

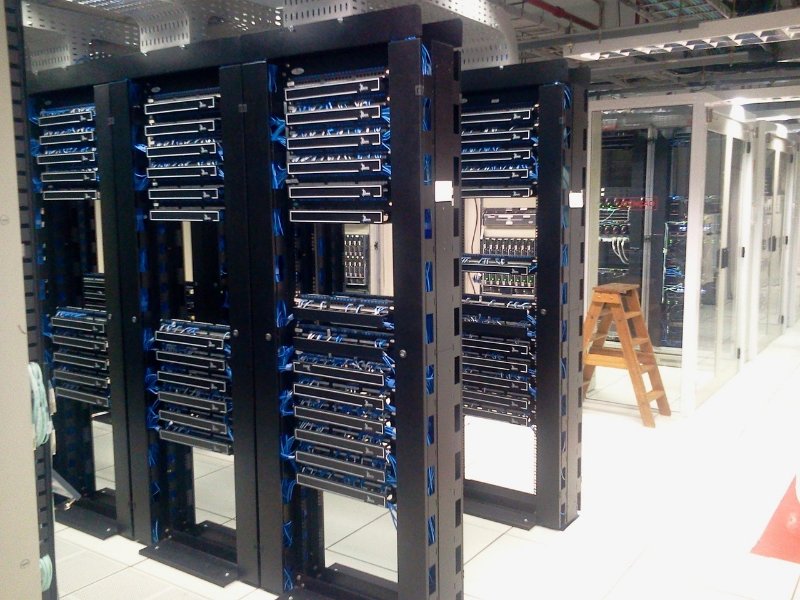

Unfortunately, AI appears to follow a similar path. Entrepreneurs, investors and politicians have forgotten the value of technology and focus solely on perceived market share. And they think waving a money wand around is the best way to gain it. AI is, in fact, a deep technology, uniquely enabled by chips and advanced algorithms. Is building extra data centers really the only solution for growth? Let me throw in some numbers: Tens of millions of people work in the global energy industry. Nvidia has only 36,000 employees, OpenAI only 5,000. How do people not see that investing in deep-tech talent and companies is by far the best way to avoid an energy crisis and to attain leadership in the field of AI?

Maybe we have to help the economy a bit. What if we allocate a maximum energy allowance per AI company, much like we allocate finite bandwidth to telecom providers? Would that not be a major stimulus for deep tech? Let’s put some intelligence in AI. Maybe some wisdom, too.